Deployment Options

For self-hosted solutions, there are two basic deployment options: Ansible (the approach we are currently using) or Docker images.

- Islandora’s Ansible playbook comes with two preconfigured operating system defaults: Ubuntu 18.04 and CentOS 7. The deployment can be configured for a local VM or for production. “Ansible is the simplest solution for configuration management available. It’s designed to be minimal in nature, consistent, secure and highly reliable, with an extremely low learning curve for administrators, developers and IT managers.” - [link]

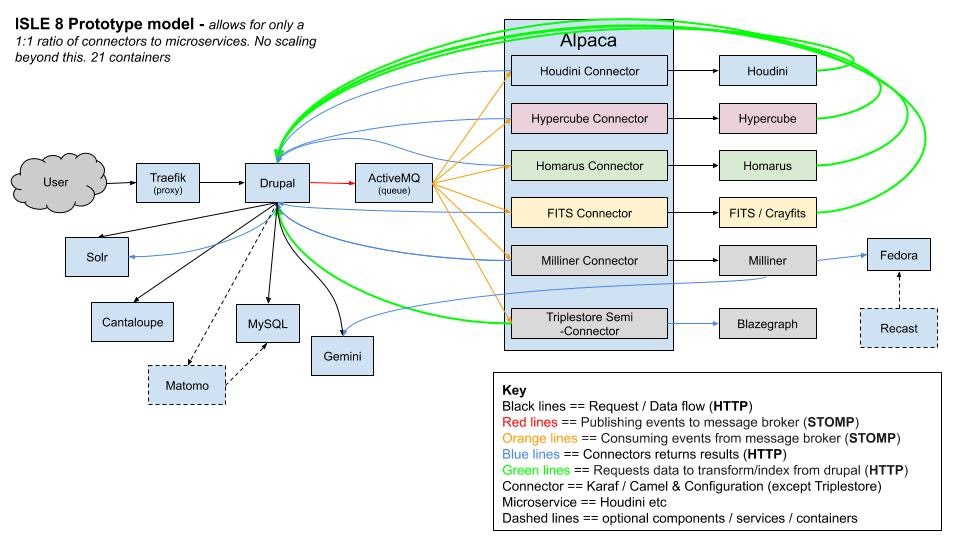

- The ISLE project facilitates the creation and management of Islandora 8 infrastructure with Docker (containerized servers). This is a bleeding-edge deployment technology, and adopting it would require some infrastructure work and staff training.

- Supports full deployment and integration with continuous development on GitHub [link]


- The stack can also be developed and deployed manually. Although there is nothing technically wrong with this option, it is generally considered time-consuming to set up initially and largely unnecessary. This has historically been Digital Initiative’s pattern of operations.

All of these options can utilize a continuous development (automated deployment) life cycle.

New Development Workflow Options

Together, Docker Compose and Docker Desktop allow developers to deploy applications to Amazon ECS on AWS Fargate. This functionality streamlines deploying and managing containers in AWS from a local development environment running Docker, which means changes can reach production much faster.

Previously, taking a local Compose file and running it on Amazon ECS was challenging because Amazon ECS requires constructs that are not part of the Compose specification but are necessary for the application to run in AWS. For example, to deploy a simple Compose file to Amazon ECS, a developer first had to leave the Docker experience and configure an Amazon VPC, an Amazon ECS cluster, and an Amazon ECS task definition, to name just a few of the AWS resources needed. As part of the collaboration between Docker and AWS, this is now handled natively, so developers can keep using tools like the Docker CLI and Docker Compose without setting up those resources in AWS outside the Docker experience. By creating and switching to a new Docker context, a developer can simply issue an up command via Docker Compose, which creates those resources in AWS automatically. This gives developers an easy path to deploying and running secure, scalable production applications on Amazon ECS.

While the new process is fully documented on the Docker blog and in the GitHub repository, the beta experience can be illustrated with a simple frontend web application using NGINX that talks to a backend written in Go, both defined in a docker-compose.yaml file. In the beta, users will notice an additional `ecs` keyword in the Docker commands. This is because the beta relies on a plugin in the short term; for general availability (GA), the experience will be integrated with Docker Desktop, and a developer can simply switch contexts to run applications on Amazon ECS using the Docker commands they already know. The setup is straightforward: the user defines the AWS profile, the ECS cluster name, and the AWS Region to deploy to. Issuing `docker ecs compose up` then creates the application and all the resources it needs in AWS.

For more information, see “From Docker Straight to AWS” and “Docker CLI plugin for Amazon ECS” on GitHub.